As if beauty pageants with humans weren’t awful enough. Let’s celebrate simulated women with beauty standards too unrealistic for any real women to live up to!

generating ai images uses less energy than gaming.

On a per-use basis, you’re probably correct. However, mass adoption is being crammed down our throats and up our asses whether we like it or not. Nobody’s forcing anybody to play video games.

Right, but I was talking about this AI beauty pageant and the hobby of generating AI images in general. No one is being forced to do either of those, and me making some images uses way less energy than gaming.

What are you defending here?

not really defending anything, I just don’t like when people’s hatred for ai blinds them to the facts. this “ai beauty pageant” probably barely used any energy.

Have you got a source for that?

A quick search suggests that generating an image consumes between 0.01 and 0.29 kWh (quite a range, so let’s take roughly the middle and use 0.14 kWh), while playing Cyberpunk on a PS5 pulls about 200 W, so ~0.2 kWh per hour.

Seems pretty comparable, assuming you only generate a few images an hour… but if you were generating dozens, it seems like you’d overtake gaming pretty quickly.

Edit: I apologise, I took the Google summary of an article at face value. The linked article here actually says the figure is per 1,000 image generations, which is far lower. Urgh, though actually the article also says 0.01 to 0.29 kWh. I’m just going to find another article. 🤦 If you did have a source with numbers, I’d still be interested in seeing it!

Great question! I actually don’t really have an official source, it’s from my own experience.

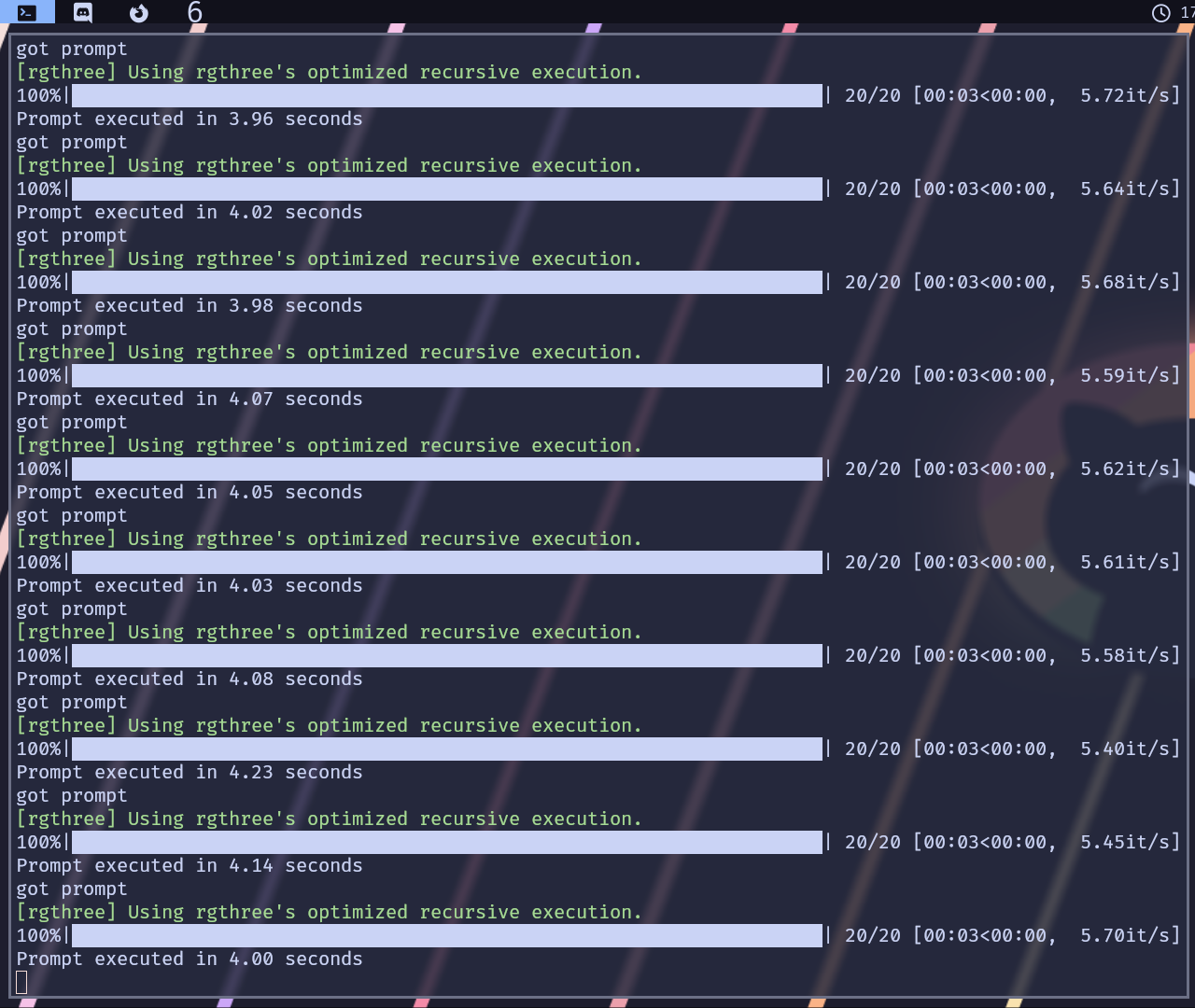

I run ComfyUI locally on my own laptop and generating an image takes 4 seconds, during which my 3070 Laptop GPU uses 80 watts (the maximum amount of power it can use). It also fully uses one of the 16 threads of my i7-11800H (TDP of 45 W). Let’s overestimate a bit and say it uses 100% of the CPU (even though in reality it’s only 6.25%), which adds 45 watts, resulting in 125 watts (or 83 watts if you account for the fact that it only uses one thread).

That’s 125 watts for 4 seconds for one image, or about 0.139 Wh (0.000139 kWh). That would be 7,200 images per kWh. Playing one hour of Cyberpunk on a PS5 (about 0.2 kWh) would be equivalent to me generating 1,440 images on my laptop.
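For anyone who wants to check the arithmetic, here is a minimal Python sketch of the same calculation. It only uses the figures quoted in this thread (80 W GPU, 45 W CPU TDP, 16 threads, 4 seconds per image, roughly 200 W for the PS5); the function and constant names are made up for illustration, and none of the numbers are independently measured.

```python
# All figures are taken from the comments in this thread, not measured here.
GPU_WATTS = 80.0          # 3070 Laptop GPU at its power limit
CPU_TDP_WATTS = 45.0      # i7-11800H TDP
CPU_THREADS = 16
SECONDS_PER_IMAGE = 4.0
PS5_WATTS = 200.0         # rough draw while playing Cyberpunk, per the earlier comment


def wh_per_image(watts: float, seconds: float = SECONDS_PER_IMAGE) -> float:
    """Energy for one image in watt-hours: power (W) * time (s) / 3600."""
    return watts * seconds / 3600.0


def images_per_kwh(watts: float) -> float:
    """How many images fit into one kilowatt-hour at the given power draw."""
    return 1000.0 / wh_per_image(watts)


def images_per_ps5_hour(watts: float) -> float:
    """Images that use the same energy as one hour of PS5 play (200 Wh)."""
    return PS5_WATTS / wh_per_image(watts)


# Overestimate: GPU plus the full CPU TDP.
worst_case_watts = GPU_WATTS + CPU_TDP_WATTS
# Closer estimate: GPU plus one of 16 threads' share of the TDP.
one_thread_watts = GPU_WATTS + CPU_TDP_WATTS / CPU_THREADS

if __name__ == "__main__":
    for label, watts in [("worst case", worst_case_watts),
                         ("one thread", one_thread_watts)]:
        print(f"{label}: {watts:.1f} W, "
              f"{wh_per_image(watts):.3f} Wh/image, "
              f"{images_per_kwh(watts):.0f} images/kWh, "
              f"{images_per_ps5_hour(watts):.0f} images per PS5 hour")
```

With the one-thread estimate the per-image energy drops further, so the 125 W figure is the pessimistic end of this particular setup.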

“Sources”:

Oh I forgot to add a disclaimer kinda. I use a model based on SD1.5, which is best for generating new images at 768x512 pixels. Upscaling it to 1536x1024 using AI would take about 4 times as long and thus use 4 times as much energy, but you would only upscale an image after having generated many images and getting one you are satisfied with, so I don’t think it makes that big of a difference. Maybe out of 100 pictures I’d upscale one to share it. Though I mostly do AI image generation because I think the technology is really cool. I love toying around with the node system in ComfyUI.
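To put a rough number on "not that big of a difference", here is a small Python sketch. It assumes the upscale is an extra step that costs about 4 times a base generation on top of the original image, and that about 1 in 100 images gets upscaled; both are readings of the comment above, and the function name is hypothetical.

```python
def average_wh_per_image(base_wh: float,
                         upscale_fraction: float = 0.01,
                         upscale_cost_factor: float = 4.0) -> float:
    """Average energy per generated image when a fraction of them is upscaled.

    Assumption: every image costs base_wh, and each upscaled image costs an
    additional upscale_cost_factor * base_wh on top of that.
    """
    return base_wh * (1.0 + upscale_fraction * upscale_cost_factor)


if __name__ == "__main__":
    base = 125.0 * 4.0 / 3600.0  # 125 W for 4 s, in Wh (figures from the thread)
    avg = average_wh_per_image(base)
    print(f"base: {base:.4f} Wh, with occasional upscaling: {avg:.4f} Wh "
          f"({(avg / base - 1.0) * 100:.0f}% more)")
```

Under those assumptions the average energy per image goes up by about 4%, which does not change the comparison with gaming.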