I’m planning on building a new home server and was thinking about the possibility of using disk spanning to create matching disk sizes for a RAID array. I have 2x2TB drives and 4x4TB drives.

Comparison with RAID 5

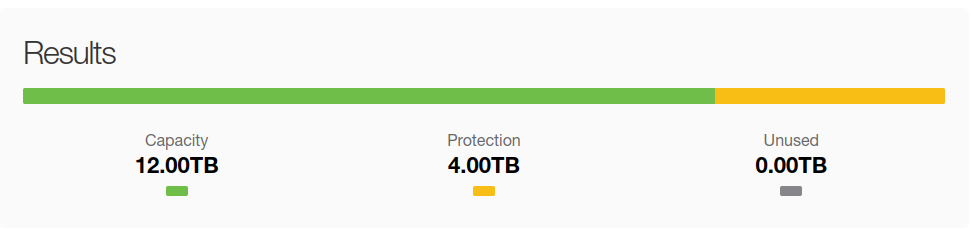

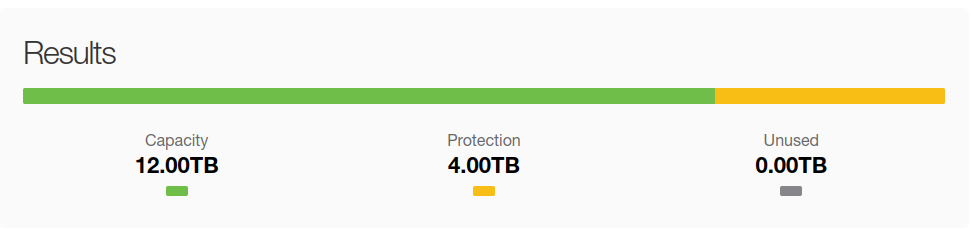

4 x 4 TB drives

- 1 RAID array

- 12 TB total

4 x 4 TB drives & 2 x 2 TB drives

- 2 RAID arrays

- 14 TB total

5 x 4* TB drives

- Several 4TB disks and 2 smaller disks spanned to produce a 4 TB block device

- 16 TB total
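The three totals above can be checked with a quick sketch. It assumes that RAID 5 treats every member as the size of the smallest one and spends one member on parity, and that the second array in the middle layout is a two-disk mirror (RAID 5 needs at least three members). The function names are made up for illustration, and filesystem overhead is ignored.

```python
def raid5_usable_tb(member_sizes_tb):
    """Usable space of one RAID 5 array: every member counts as the
    smallest member, and one member's worth of space holds parity."""
    if len(member_sizes_tb) < 3:
        raise ValueError("RAID 5 needs at least 3 members")
    return (len(member_sizes_tb) - 1) * min(member_sizes_tb)


def raid1_usable_tb(member_sizes_tb):
    """Usable space of a mirror: the size of the smallest member."""
    return min(member_sizes_tb)


def span_tb(member_sizes_tb):
    """A span (linear concatenation) simply adds the member sizes."""
    return sum(member_sizes_tb)


# Layout 1: a single RAID 5 array of 4 x 4 TB.
layout_1 = raid5_usable_tb([4, 4, 4, 4])

# Layout 2: the same array plus a separate mirror of the 2 x 2 TB drives
# (assumed; the post only says "2 RAID arrays").
layout_2 = raid5_usable_tb([4, 4, 4, 4]) + raid1_usable_tb([2, 2])

# Layout 3: the 2 x 2 TB drives spanned into one 4 TB device, used as
# the fifth member of a RAID 5 array.
layout_3 = raid5_usable_tb([4, 4, 4, 4, span_tb([2, 2])])

print(layout_1, layout_2, layout_3)
```

The spanned layout gains 2 TB over the two-array layout because the small drives contribute their full 4 TB as data capacity instead of mirroring each other.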

I’m not actually planning on doing this, because this setup will probably have all kinds of problems. However, I do wonder: what would those problems be?

Synology has its own version of RAID5 that can handle your specific disk configuration without any modification:

Not sure if similar things are available on other platforms.

What are you going to be running on these disks? I haven’t used ZFS; maybe it supports mismatched sizes? Or maybe you could do one array with the 4s, another with the 2s, and use LVM to pool them together? Or just keep them separate and fill them up independently.

ZFS doesn’t really support mismatched disks. In OP’s case it would behave as if it was 4x 2TB disks, making 4 TB of raw storage unusable, with 1 disk of parity that would yield 6TB of usable storage. In the future the 2x 2TB disks could be swapped with 4 TB disks, and then ZFS would make use of all the storage, yielding 12 TB of usable storage.

BTRFS handles mismatched disks just fine, however its RAID5 and RAID6 modes are still partially broken. RAID1 works fine, but results in half the storage being used for redundant copies, so this would again yield a total of 6TB usable with the current disks.
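The figures in this comment (6 TB now, 12 TB after the swap) work out for a four-disk pool of 2x2TB plus 2x4TB. A rough sketch of the arithmetic follows, with the same rules also applied to all six of OP's drives. It assumes a single RAID-Z1 vdev sizes every disk to the smallest member, and that BTRFS RAID1 keeps two copies of each block on different disks. The function names are hypothetical, and metadata overhead and TB vs. TiB differences are ignored.

```python
def raidz1_usable_tb(disks_tb):
    """Single RAID-Z1 vdev: each disk counts as the smallest disk,
    and one disk's worth of space goes to parity."""
    return (len(disks_tb) - 1) * min(disks_tb)


def raidz1_unused_raw_tb(disks_tb):
    """Raw space left unused because larger disks are truncated."""
    return sum(disks_tb) - len(disks_tb) * min(disks_tb)


def btrfs_raid1_usable_tb(disks_tb):
    """Two-copy RAID1 on mixed sizes: half the raw total, unless one
    disk is larger than all the others combined."""
    total = sum(disks_tb)
    return min(total / 2, total - max(disks_tb))


# The four-disk case that matches the numbers in the comment.
four_disks = [2, 2, 4, 4]
zfs_now = raidz1_usable_tb(four_disks)          # usable today
zfs_wasted = raidz1_unused_raw_tb(four_disks)   # raw TB left unused
zfs_after_swap = raidz1_usable_tb([4, 4, 4, 4]) # after replacing the 2 TB disks
btrfs_now = btrfs_raid1_usable_tb(four_disks)

# The same rules applied to all six drives from the original post.
six_disks = [2, 2, 4, 4, 4, 4]
zfs_six = raidz1_usable_tb(six_disks)
btrfs_six = btrfs_raid1_usable_tb(six_disks)

print(zfs_now, zfs_wasted, zfs_after_swap, btrfs_now, zfs_six, btrfs_six)
```

With all six drives in one pool, both approaches come out at roughly 10 TB usable under these assumptions.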

Typical problems with parity arrays are:

- They suffer from the so-called “write hole”. If power fails while data is being written to the array, different drives can end up with conflicting versions of the data and no way to reconcile them. The software solution is to use ZFS, but ZFS has a pretty steep learning curve and is not easy to manage. The hardware solution is to make sure power to the array never fails, either by putting the machine on a UPS or by connecting the drives through a controller card with a battery-backed cache, which lets pending writes complete even after a power loss.

- Making up a 4 TB device out of 2x2 TB drives is not a good idea: you’re basically doubling the failure probability of that particular “4 TB” drive.

- Parity arrays usually require all drives to be the same size. That means that if you want to upgrade your array, you need to replace every drive before you can take advantage of the increased space. There are parity schemes like Unraid that work around this by using a dedicated parity drive, at least as large as the biggest data drive, that computes parity across all the others regardless of their sizes; but Unraid is proprietary and requires a paid license.

- If a drive fails, rebuilding the array after replacing that drive requires an intensive pass through all the surviving members of the array. This can greatly increase the risk of another drive failing. A RAID5 array would be lost if that occurred. That’s why people usually recommend RAID6, but RAID6 only makes sense with 5+ drives.
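The “doubling” in the spanning point above can be made concrete with a small sketch. It assumes the two drives fail independently, and the 2% per-drive failure probability is a made-up illustrative number, not a measured rate. A span is lost as soon as either member fails.

```python
def span_failure_probability(member_probs):
    """Probability that at least one member of a span fails, assuming
    independent failures. Losing any member loses the whole span."""
    survive_all = 1.0
    for p in member_probs:
        survive_all *= (1.0 - p)
    return 1.0 - survive_all


p_drive = 0.02  # hypothetical failure probability of one drive over some period

single_drive = p_drive
spanned_pair = span_failure_probability([p_drive, p_drive])
ratio = spanned_pair / single_drive

print(f"single: {single_drive:.4f}  span of two: {spanned_pair:.4f}  ratio: {ratio:.2f}")
```

For small per-drive probabilities the ratio stays just under 2, which is why the spanned “4 TB” member is the weakest member of the array.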

Unrelated to parity:

- Using a lot of small drives is very power-intensive and inefficient.

- Whenever designing arrays you have to consider what you’ll do in case of drive failure. Do you have a replacement on hand? Will you go out and buy another drive? How long will it take for it to reach you?

- What about backups?

- How much of your data is really essential and should be preserved at all costs?

I ran RAID-Z2 across 4x14TB and a (4+8)TB LVM LV for close to a year before finally swapping the (4+8)TB LV for a 5th 14TB drive via `zpool replace` without issue. I did, however, make sure to use RAID-Z2 rather than Z1 to account for said shenanigans out of an abundance of caution, and I would highly recommend doing the same. That is to say, the extra 2x2TB would be good additional parity, but I would only consider it as additional parity, not the only parity.

Based on fairly unscientific testing from before and after, it did not appear to meaningfully affect performance.

If you don’t need realtime parity, I’ve had no issues on my media server running mismatched drives pooled via MergerFS with SnapRAID doing scheduled parity.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
| --- | --- |
| LVM | (Linux) Logical Volume Manager for filesystem mapping |
| RAID | Redundant Array of Independent Disks for mass storage |
| ZFS | Solaris/Linux filesystem focusing on data integrity |

3 acronyms in this thread; the most compressed thread commented on today has 17 acronyms.

[Thread #788 for this sub, first seen 5th Jun 2024, 22:35]

If you’re doing weird shit, partition the 4TBs into 2x2TB, now you have 10x2TB. Or use Unraid / MergerFS + SnapRAID.

I don’t see why it would cause any problems, apart from the stacked mapping layers. I wonder whether LVM can do it natively, without adding an intermediate block device made of 2 x 2 TB?
