It’s all C++ now, so it doesn’t really need docker! I don’t use docker for any ML stuff, just pip/uv venvs.

You might consider dockerless ROCm on Arch soon; it looks like 7.1 is in the staging repo right now.

Oh, I forgot!

You should check out Lemonade:

https://github.com/lemonade-sdk/lemonade

It supports Ryzen NPUs via two different runtimes… though apparently not the 8000 series yet?

Yeah… Even if the LLM is RAM speed-constrained, simply using another device so it doesn’t interrupt the LLM would be good.

Honestly, AMD’s software dev efforts are baffling. They’ve focused on a few libraries that precisely no one uses, like this: https://github.com/amd/Quark

While ignoring issues holding back entire sectors (like broken flash-attention) with devs screaming about it at the top of their lungs.

Intel suffers from corporate Game of Thrones, but at least they have meaningful contributions in the open source space here, like the SYCL/AMX llama.cpp code or the OpenVINO efforts.

It still uses memory bandwidth, unfortunately. There’s no way around that, though NPU TTS would still be neat.

…Also, generally, STT responses can’t be streamed, so you might as well use the iGPU anyway. TTS can be chunked, I guess, but do the major implementations do that?
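A rough sketch of what sentence-level TTS chunking could look like: buffer the streamed LLM text and hand each finished sentence to the TTS engine as soon as it’s complete. `chunk_for_tts` is a hypothetical helper, and splitting on `.`, `!` and `?` followed by whitespace is a naive assumption; this isn’t how any particular implementation does it.

```python
import re
from typing import Iterable, Iterator

# Naive sentence boundary (assumption): '.', '!' or '?' followed by whitespace.
# Real TTS front-ends use much smarter text segmentation.
_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def chunk_for_tts(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text tokens."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _BOUNDARY.split(buffer)
        # Every part except the last one is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        # The last part may still be mid-sentence, so keep buffering it.
        buffer = parts[-1]
    # Flush whatever is left when the stream ends.
    if buffer.strip():
        yield buffer.strip()


if __name__ == "__main__":
    stream = ["Hello the", "re. How are", " you? Fine"]
    for chunk in chunk_for_tts(stream):
        print(chunk)  # each chunk would go to the TTS engine here
```

The first sentence can start synthesizing while the LLM is still generating the rest, which is the whole point of chunking.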

Okay. Just because it was proved doesn’t mean they agree.

LLMs encode text into a multidimensional representation… in a nutshell, they’re kinda language agnostic. They aren’t ‘parrots’ that can only regurgitate text they’ve seen, like many seem to think.

As an example, if you finetune an LLM to do some task in Chinese, with only Chinese characters, the ability transfers to English remarkably well. Or Japanese, if it knows Japanese. Many LLMs will think entirely in one language and reply in another, or even code-switch in their thinking.

…Just because it was explained doesn’t mean they agree.

The iGPU is more powerful than the NPU on these things anyway. The NPU is more for ‘background’ tasks, like Teams audio processing or whatever it’s used for on Windows.

Yeah, in hindsight, AMD should have assigned (and still should assign) a few engineers to popular projects (and pushed NPU support harder), but GGML support is good these days. It’s gonna be pretty close to RAM speed-bound for text generation.
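Back-of-envelope math for why text generation ends up RAM speed-bound: for a dense model, each generated token has to stream (roughly) all of the weights from memory, so peak bandwidth divided by weight size gives a ceiling on tokens/s. The DDR5-5600 dual-channel setup and the 4.48 GB quantized model below are assumed example numbers, not measurements; real throughput lands below this ceiling, and for MoE models only the active parameters count.

```python
def ddr_peak_bandwidth_gb_s(mt_per_s: int, channels: int, bits_per_channel: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    bytes_per_transfer = bits_per_channel / 8
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


def decode_tokens_per_s_ceiling(bandwidth_gb_s: float, weights_read_per_token_gb: float) -> float:
    """Upper bound on generation speed if every token reads these weights once from RAM."""
    return bandwidth_gb_s / weights_read_per_token_gb


if __name__ == "__main__":
    bw = ddr_peak_bandwidth_gb_s(5600, channels=2)  # assumed: DDR5-5600, dual channel
    print(f"peak bandwidth ~{bw:.1f} GB/s")
    # assumed: ~4.48 GB of quantized weights read per token
    print(f"decode ceiling ~{decode_tokens_per_s_ceiling(bw, 4.48):.1f} tok/s")
```

With those assumed numbers it works out to about 89.6 GB/s and a ceiling of about 20 tok/s, no matter how fast the iGPU or NPU is.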

Ah. On an 8000-series APU, to be blunt, you’re likely better off with Vulkan + whatever omni models GGML supports these days. Last I checked, text generation (TG) is faster and prompt processing is close to ROCm.

…And yeah, that was total false advertising on AMD’s part. They’ve completely diluted the term, kinda like TV makers did with ‘HDR’.

You can do hybrid inference of Qwen 30B Omni for sure. Or fully offloaded inference of VibeVoice Large (9B). Or really a huge array of models.

…The limiting factor is free time, TBH. Just sifting through the sea of models, seeing if they work at all, testing if quantization works and such is a huge timesink, especially if you’re trying to load stuff with ROCm.

I mean, there are many. TTS and self-hosted automation are huge in the local LLM scene.

We even have open source “omni” models now that can ingest and output speech tokens directly. That means they get more semantic understanding from tone and such, they ‘choose’ the tone to reply with, and their output is streamable word by word. They support all sorts of tool calling.

…But they aren’t easy to run. It’s still in the realm of homelabs with at least an RTX 3060 + hacky python projects.

If you’re mad, you can self-host LongCat Omni:

https://huggingface.co/meituan-longcat/LongCat-Flash-Omni

And blow Alexa out of the water with an MIT-licensed model from, I kid you not, a Chinese food delivery company.

EDIT

For the curious, see:

Audio-text-to-text (and sometimes TTS): https://huggingface.co/models?pipeline_tag=audio-text-to-text&num_parameters=min%3A6B&sort=modified

TTS: https://huggingface.co/models?pipeline_tag=text-to-speech&num_parameters=min%3A6B&sort=modified

“Anything-to-anything,” generally image/video/audio/text -> text/speech: https://huggingface.co/models?pipeline_tag=any-to-any&num_parameters=min%3A6B&sort=modified

Bigger than 6B to exclude toy/test models.
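If you want to tweak those filters (a different task, a different size cutoff), the query string can be rebuilt with the standard library. `hf_model_search_url` is a hypothetical helper, and the parameter names are simply copied from the URLs above, not taken from any documented Hugging Face API.

```python
from urllib.parse import urlencode


def hf_model_search_url(pipeline_tag: str, min_params: str = "6B", sort: str = "modified") -> str:
    """Rebuild a Hugging Face model-listing URL like the ones above (hypothetical helper)."""
    query = urlencode({
        "pipeline_tag": pipeline_tag,             # e.g. "text-to-speech", "any-to-any"
        "num_parameters": f"min:{min_params}",    # urlencode turns ':' into '%3A'
        "sort": sort,
    })
    return f"https://huggingface.co/models?{query}"


if __name__ == "__main__":
    for tag in ("audio-text-to-text", "text-to-speech", "any-to-any"):
        print(hf_model_search_url(tag))
```

Bumping `min_params` is an easy way to cut even more of the noise.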

The mention of Rhea is cool. These are massive ARM processors with a lot of SIMD, and HBM and DDR5 hanging off them:

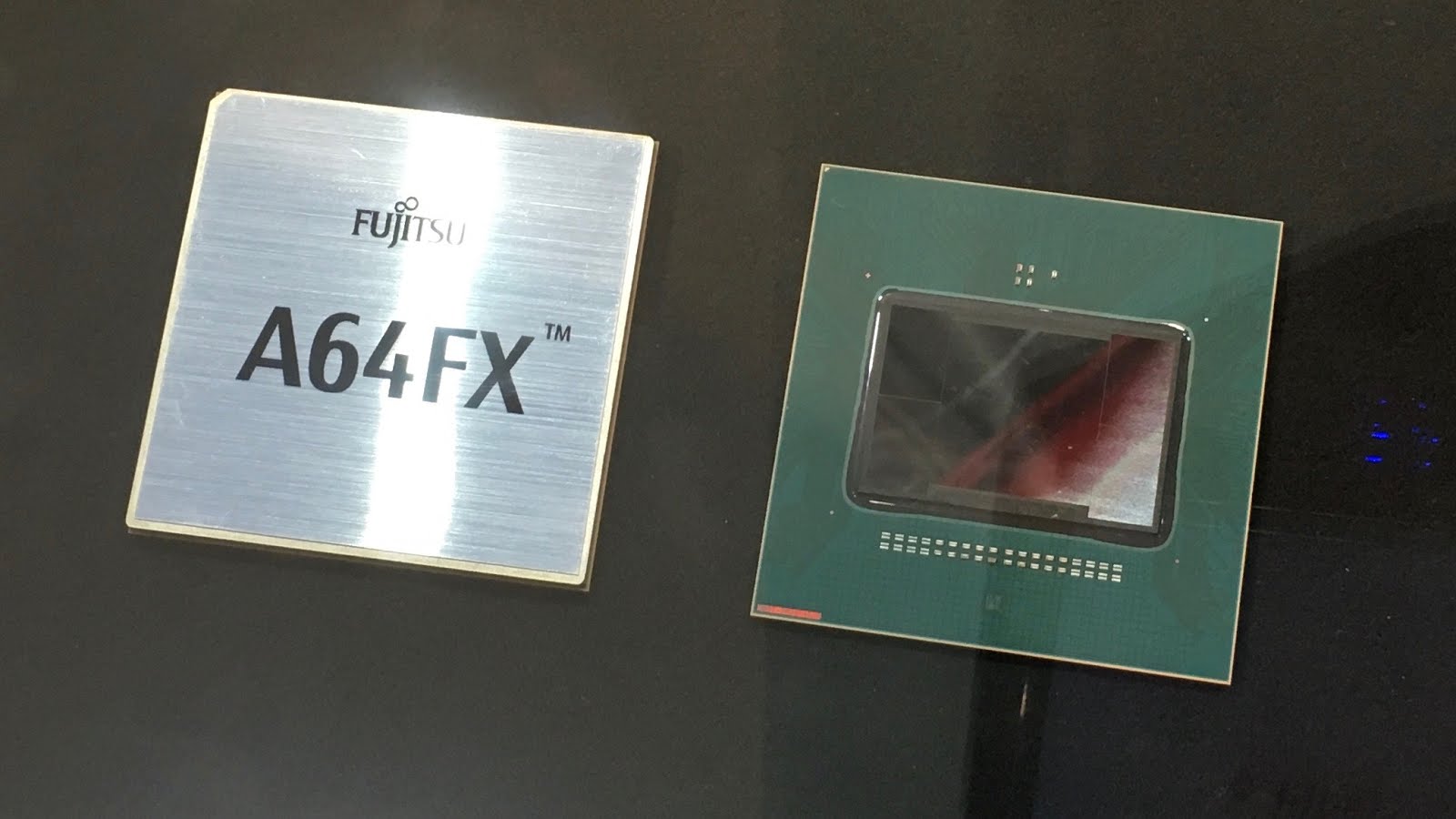

Kinda like Fujitsu’s mad (but now old) ARM A64FX:

The links I’m finding aren’t working now, but the US Dept of Energy ported code to them for a paper, and apparently loved that kind of architecture compared to GPUs and traditional CPUs: https://www.olcf.ornl.gov/2020/11/10/olcf-to-trial-long-awaited-fujitsu-processors-in-arm-based-test-bed-system/

Heh, Tom’s is a bad source:

> Alice Recoque will be co-funded with a total budget of around EUR 355 million covering the acquisition, delivery, installation, and maintenance of the system. The EuroHPC JU will finance 50% of the total cost through the Digital Europe Programme (DEP), while the remaining 50% will be funded by France, the Netherlands and Greece within the Jules Verne consortium.

https://old.reddit.com/r/opensource/comments/1kfhkal/open_webui_is_no_longer_open_source/

https://old.reddit.com/r/LocalLLaMA/comments/1mncrqp/ollama/

Basically, they’re both using their popularity to push proprietary bits, which their development is shifting toward. They’re enshittifying.

In addition, ollama is just a demanding leech on llama.cpp that contributes nothing back, while hiding its connection to the underlying library at every opportunity. They do scummy things like:

Renaming models for SEO, like “DeepSeek R1,” which is really the 7B distill.

It has really bad default settings (like a 2K default context limit, and imatrix-free quants by default), which give local LLM runners a bad impression of the whole ecosystem.

They mess with chat templates and, on top of that, introduce bugs that don’t exist in base llama.cpp.

Sometimes they lag behind llama.cpp on GGUF support.

And other times, they make their own sloppy implementations for ‘day 1’ support of trending models. These often work poorly; the support is just there for SEO. But this also leads to some public GGUFs not working with the underlying llama.cpp library, or working inexplicably badly, polluting llama.cpp’s issue tracker.

I could go on and on with examples of their drama, but needless to say most everyone in localllama hates them. The base llama.cpp maintainers hate them, and they’re nice devs.

You should use llama.cpp’s llama-server as an API endpoint. Or, alternatively, the ik_llama.cpp fork, kobold.cpp, or croco.cpp. Or TabbyAPI as an ‘alternate’ GPU-focused quantized runtime. Or SGLang if you just batch small models. llama-cpp-python, LM Studio; literally anything but ollama.
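For reference, talking to llama-server from a script is just an HTTP POST to its OpenAI-compatible chat endpoint. This sketch assumes llama-server is running locally on its default address (`http://127.0.0.1:8080`); `build_chat_request` and `chat` are hypothetical helper names, and other OpenAI-compatible servers may also want a `model` field in the payload.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumed: llama-server's default host and port


def build_chat_request(prompt: str, base_url: str = BASE_URL, max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST to the OpenAI-style /v1/chat/completions endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, base_url: str = BASE_URL) -> str:
    """Send one chat turn and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, base_url)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("Say hi in five words."))
```

Since it’s plain OpenAI-style JSON, the same base URL should work with many existing clients and UIs too.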

As for the UI, that’s a muddier answer and totally depends on what you use LLMs for. I use mikupad for its ‘raw’ notebook mode and logit displays, but there are many options. Llama.cpp has a pretty nice built-in one now.

Have not played it yet, but for me, personally, E33 looks like one of those “better to watch the cutscenes on YouTube” games.

I bounced off Witcher 3 too. Watched friends play a lot of RDR2, not interested.

…BG3 was sublime though. I don’t even like D&D combat, or ‘Tolkien-esque’ fantasy, but holy hell. It’s gorgeous, it just oozes charisma, and was quite fun in coop.

Open WebUI isn’t very ‘open’ and kinda problematic last I saw. Same with ollama; you should absolutely avoid either.

…And actually, why is Open WebUI even needed? For an embeddings model or something? All the browser should need is an OpenAI-compatible endpoint.

Yeah that’s really awesome.

…But it’s also something the anti-AI crowd, which is a large part of FF’s userbase, would hate once they realize it’s an ‘LLM’ doing the translation. The well has been poisoned by said CEOs.

I mean, there are literally hundreds of API providers. I’d probably pick Cerebras, but you can take your pick from any jurisdiction and any privacy policy.

I guess you could rent an on-demand cloud instance yourself too, that spins down when you aren’t using it.

That community’s mods are super nice. Probably too nice, TBH.

…But yeah. Follow community rules, or post elsewhere. What is so hard about that?